How to stop your ecommerce website's performance metrics from declining: optimising page load performance

For one of our sprints, we decided to focus on optimising page load performance. Here is the story of how it all worked out.

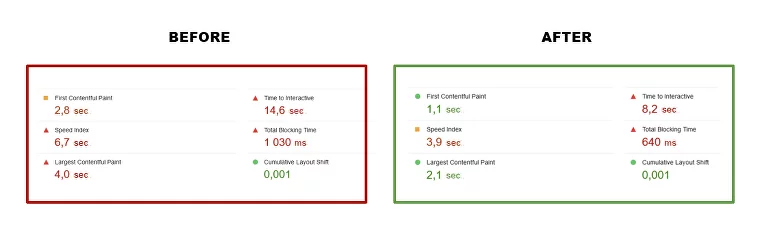

Here is a short summary of the results:

Not all metrics have reached the green zone yet, but many of them have improved significantly. Further work is planned. In the meantime, we will explain the steps we took to reach the current result.

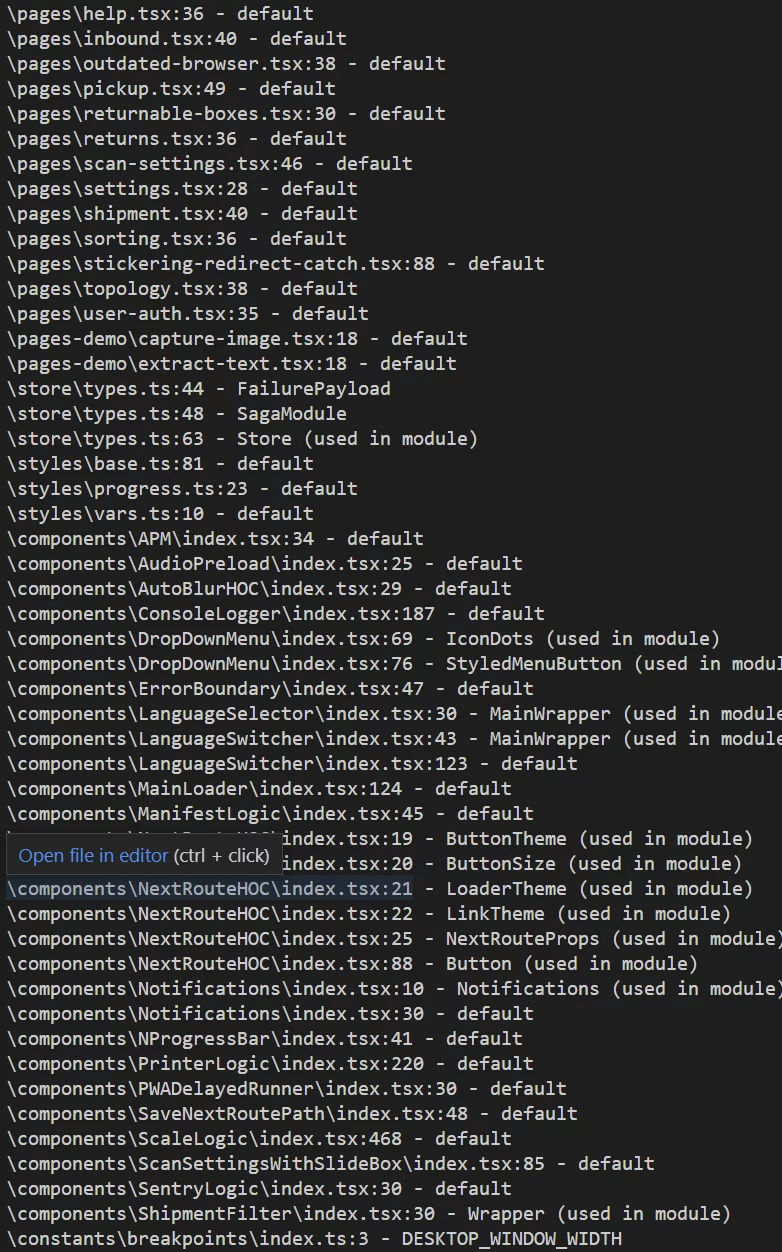

First, we use ts-prune, a tool that quickly finds unused exports (dead code) in a project.

Here is an example script for package.json:

```json
"deadcode-check": "npx --yes ts-prune -s \"pages/[**/]?(_app|_document|_error|index)|store/(index|sagas)|styles/global\""
```

Running it prints a list of problem areas to the console. We go through them manually and remove the dead code.
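The idea behind such a check can be shown with a simplified model. This is not how ts-prune is implemented, and the module data and file names are hypothetical. The model collects every imported name, reports exports that nobody imports, and uses a skip pattern to leave out entry files such as `pages/_app`, whose exports are consumed by Next.js rather than imported by your own code.

```javascript
// Simplified model of an unused-export check (not ts-prune's real implementation).
// Each module lists the names it exports and the names it imports from other modules.
function findUnusedExports(modules, skipPattern) {
  // Collect every "file:name" pair that some module imports.
  const used = new Set();
  for (const mod of modules) {
    for (const imp of mod.imports) {
      used.add(`${imp.from}:${imp.name}`);
    }
  }
  const unused = [];
  for (const mod of modules) {
    // Files matching the skip pattern (e.g. framework entry points) are never reported.
    if (skipPattern && skipPattern.test(mod.file)) continue;
    for (const name of mod.exports) {
      if (!used.has(`${mod.file}:${name}`)) unused.push(`${mod.file}:${name}`);
    }
  }
  return unused;
}

// Hypothetical project: _app uses formatPrice, nothing uses formatDate.
const modules = [
  { file: 'pages/_app', exports: ['default'], imports: [{ from: 'utils/format', name: 'formatPrice' }] },
  { file: 'utils/format', exports: ['formatPrice', 'formatDate'], imports: [] },
];
console.log(findUnusedExports(modules, /pages\/_app/)); // [ 'utils/format:formatDate' ]
```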

Another tool we use, depcheck, generates a list of unused npm packages. We then go through the list manually and remove everything unnecessary, which reduces the project's size and keeps the dependencies organised.

Depcheck needs to be run several times, because removing unnecessary libraries can make other libraries redundant. For example, a library removed in the first run may have been the only one using another library, which is then identified as a dead dependency in the second run.

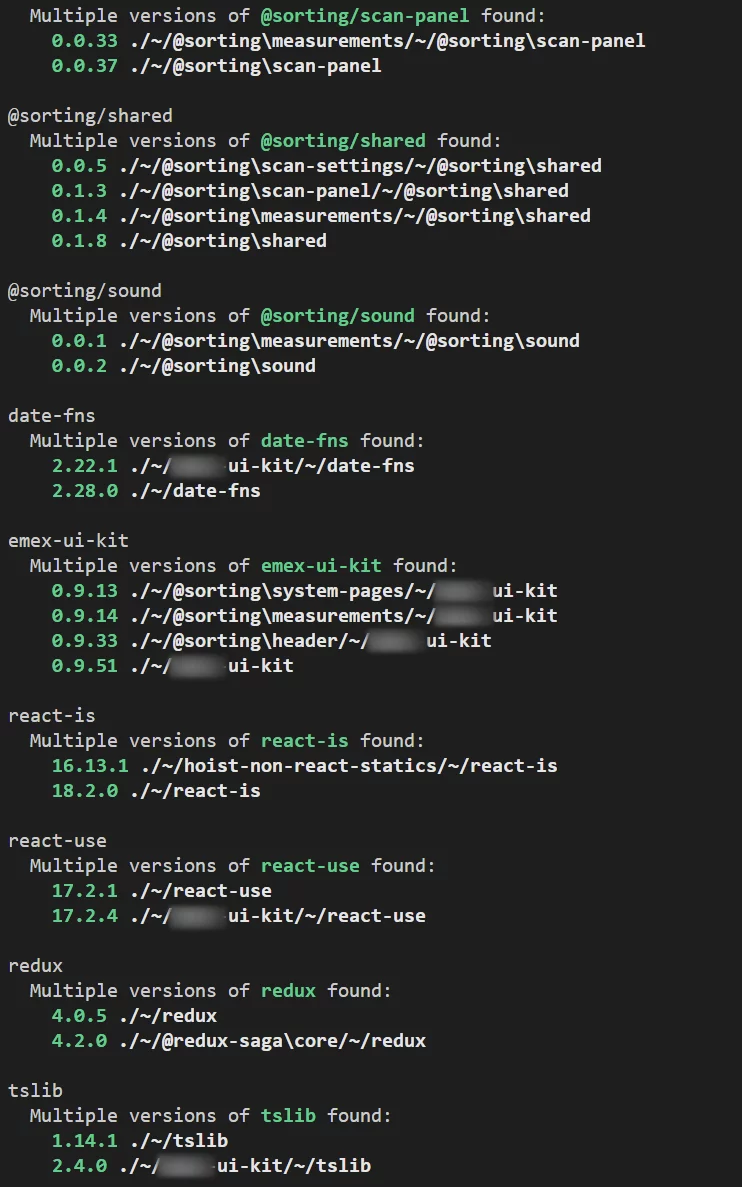

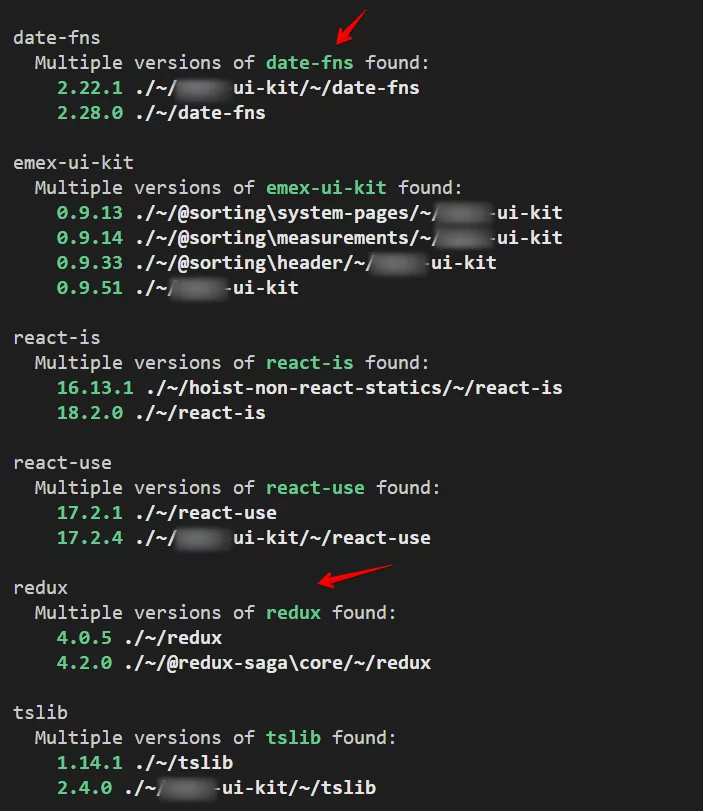
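The reason for repeated runs can be illustrated with a simplified model. This is not depcheck's actual algorithm, and the package names are hypothetical. Each "run" removes the dependencies that nothing still present uses. Removals can expose new unused dependencies, so the loop stops only when a run finds nothing.

```javascript
// Simplified model of repeated depcheck runs (hypothetical data, not depcheck's algorithm).
// usedBy maps each declared dependency to what uses it:
// 'src' means project code imports it; any other string is another dependency.
function pruneUntilStable(usedBy) {
  const remaining = new Map(Object.entries(usedBy));
  const rounds = [];
  while (true) {
    // One "run": a dependency is unused if none of its users are still present.
    const unused = [...remaining.keys()].filter((dep) =>
      remaining.get(dep).every((user) => user !== 'src' && !remaining.has(user))
    );
    if (unused.length === 0) return rounds; // nothing new found: stop
    unused.forEach((dep) => remaining.delete(dep));
    rounds.push(unused);
  }
}

const rounds = pruneUntilStable({
  react: ['src'],
  'old-carousel': [], // no longer imported anywhere
  'carousel-theme': ['old-carousel'], // only needed by old-carousel
});
console.log(rounds); // [ [ 'old-carousel' ], [ 'carousel-theme' ] ]
```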

We use a plugin that analyses the project and lists duplicate packages, that is, packages included in several different versions. Here is an example of its output for one of our projects:

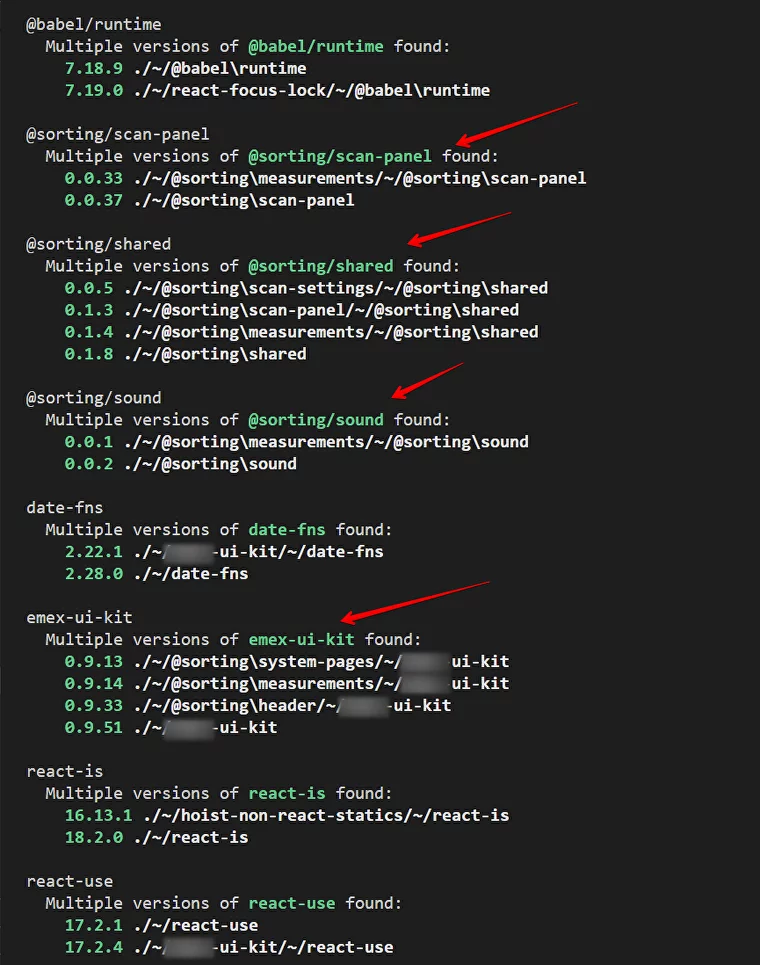
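Conceptually, such a check groups every bundled copy of a package by name and reports the names that appear in more than one version. Below is a simplified model with a hypothetical input format. It is not the plugin's actual implementation.

```javascript
// Simplified model of a duplicate-package check (hypothetical input format).
// Input: name and version of every package copy that ended up in the bundle.
function findDuplicates(bundledPackages) {
  const versionsByName = new Map();
  for (const { name, version } of bundledPackages) {
    if (!versionsByName.has(name)) versionsByName.set(name, new Set());
    versionsByName.get(name).add(version);
  }
  // Report only packages present in more than one version
  // (versions sorted as plain strings, just for stable output).
  const duplicates = {};
  for (const [name, versions] of versionsByName) {
    if (versions.size > 1) duplicates[name] = [...versions].sort();
  }
  return duplicates;
}

console.log(findDuplicates([
  { name: 'redux', version: '4.2.1' },
  { name: 'redux', version: '3.7.2' },
  { name: 'react', version: '18.2.0' },
]));
// prints { redux: [ '3.7.2', '4.2.1' ] }
```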

What to do next depends on what the output shows. For example, consider the following output:

Here, the packages clearly need to be updated. Once the dependencies agree on a single version, only one copy ends up in the bundle, which reduces its size. Another example:

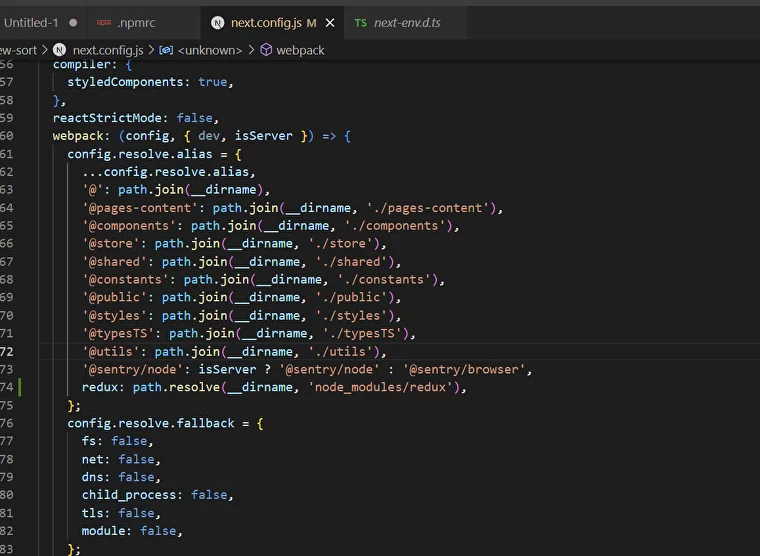

In this case, updates are also required. If it is not clear what to update and where, you can add an alias in the webpack configuration. The alias tells the bundler which copy of a package to resolve for every import, so only one version ends up in the bundle. Using Redux as an example:
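Here is a minimal sketch of such an alias. `withSingleCopy` is a hypothetical helper that merges entries into webpack's `resolve.alias`. In next.config.js it would be called from the `webpack` hook. The `node_modules/redux` path is an assumption about the project layout.

```javascript
// Hypothetical helper: add webpack aliases so that every import of the given
// packages resolves to a single, explicitly chosen copy.
// Possible use in next.config.js (path is an assumption about your layout):
//   webpack: (config) =>
//     withSingleCopy(config, { redux: path.resolve(__dirname, 'node_modules/redux') }),
function withSingleCopy(config, aliases) {
  config.resolve = config.resolve || {};
  // Keep the aliases Next.js has already set and add ours on top.
  config.resolve.alias = { ...(config.resolve.alias || {}), ...aliases };
  return config;
}

console.log(withSingleCopy({}, { redux: '/app/node_modules/redux' }).resolve.alias);
// prints { redux: '/app/node_modules/redux' }
```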

Before the optimisation, images on the website were not scaled to the screen size. We replaced all images with the "next/image" component and then adjusted the loading priorities. Images that appear in the viewport first should be loaded with high priority and without lazy loading, that is, as quickly as possible. This directly affects how fast the page renders.
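For a product listing, this rule can be captured in a small hypothetical helper. The first `aboveTheFoldCount` images get the "priority" property, and the rest keep next/image's default lazy loading. How many images are above the fold is an assumption that depends on your layout and breakpoints.

```javascript
// Hypothetical helper: mark the first images of a listing (those expected to be
// above the fold) as priority. With next/image, priority images are preloaded
// and not lazy-loaded; all others keep the default lazy loading.
function withLoadPriority(images, aboveTheFoldCount) {
  return images.map((image, index) => ({
    ...image,
    priority: index < aboveTheFoldCount,
  }));
}

const cards = withLoadPriority([{ src: '/a.jpg' }, { src: '/b.jpg' }, { src: '/c.jpg' }], 2);
console.log(cards.map((card) => card.priority)); // [ true, true, false ]
```

Each resulting object can then be spread into an image component, e.g. `<Image {...card} width={...} height={...} alt={...} />`.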

Use the "next/image" module for all images.

Serve modern image formats instead of JPG and PNG. AVIF and WebP compress better without a noticeable loss of quality, so images load faster. In next.config.js:

```javascript
// next.config.js
module.exports = {
  images: {
    formats: ['image/avif', 'image/webp'],
  },
};
```

Prioritise the loading of above-the-fold images (those within the viewport when the page first loads) using the "priority" property.

For vector images, set the "unoptimized" property.

<Image

scr="/static/icons/mainPage/qualityControl.svg"

width={40}

height={40}

unoptimized

priority

alt="Quality control"

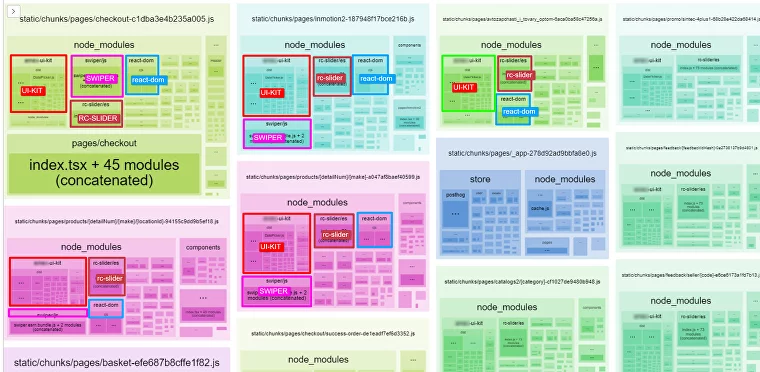

/>It was discovered that there are many duplicates in the code on each page. Components that could have been loaded once, cached in the browser and be used by all pages, were inserted into the code of each page, consuming resources during subsequent page loads.

The duplication was caused by disabling the default code-splitting algorithm in Next.js. The previous developers had done this to make Linaria work (a zero-runtime CSS-in-JS library intended to improve performance). However, disabling code splitting degraded performance instead.

We removed the line that disables code splitting, which improved performance by almost 50%. The built-in Next.js mechanism for splitting JavaScript into chunks is well tested and recommended for production. There is no need to disable it. Otherwise, code is duplicated in every page, even though reusable modules (such as React, React DOM and the UI kit) should live in separate, shared chunks that are not downloaded again on each page.
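A rough back-of-the-envelope model shows why this matters. The sizes are made-up illustrative numbers, not measurements from this project. The model also assumes that the shared chunk stays in the browser cache between page views.

```javascript
// Rough model of kilobytes downloaded while a user visits several pages
// (illustrative numbers only, not measurements).
function bytesDownloaded(pagesVisited, sharedKb, perPageKb, splitChunks) {
  if (splitChunks) {
    // Shared modules live in one common chunk, fetched once and then cached.
    return sharedKb + pagesVisited * perPageKb;
  }
  // Without splitting, every page bundle carries its own copy of the shared modules.
  return pagesVisited * (sharedKb + perPageKb);
}

console.log(bytesDownloaded(5, 300, 50, false)); // 1750
console.log(bytesDownloaded(5, 300, 50, true)); // 550
```

The gap grows with every additional page view, which is why duplicated framework and UI-kit code is so costly on a multi-page shopping journey.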

You should avoid doing this:

// next.config.js

webpack: (config, { isServer }) => {

config.optimization.splitChunks = false; //

return config;

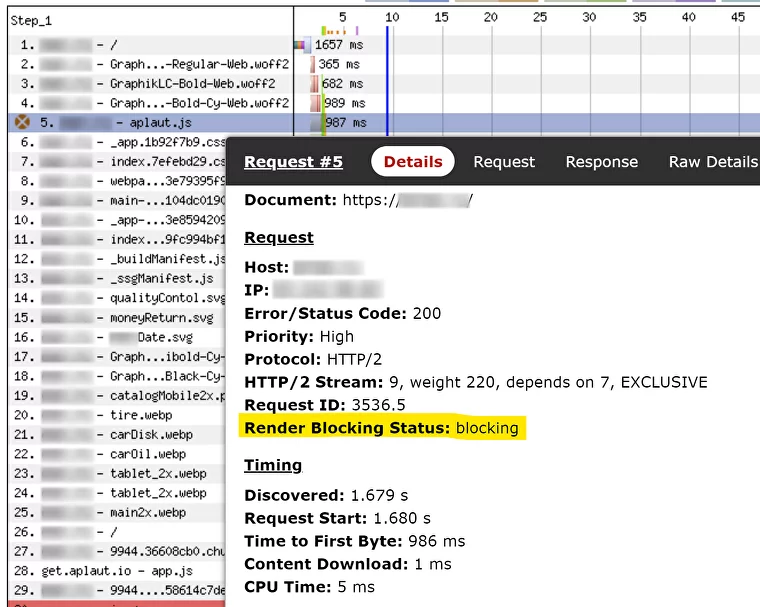

}Next, we used WebPageTest, where you can run tests on website performance and obtain various metrics. After the test, it was discovered that the website's code contains blocking scripts.

We changed the blocking script so that it loads asynchronously and only on the pages where it is needed. Synchronous loading blocks the download queue, so all scripts should be non-blocking.

Example with Aplaut:
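The Aplaut snippet itself is not reproduced here. As a generic sketch of the same idea, the hypothetical function below injects a script with `async` only when the current path matches one of the pages that need it. The widget URL and page prefixes are placeholders. The document object is passed in as a parameter so the function can be tested outside a browser.

```javascript
// Generic sketch: load a third-party script asynchronously, and only on the
// pages that need it. `doc` is the DOM document (passed in for testability).
function loadScriptOnPages(doc, src, currentPath, pagePrefixes) {
  const needed = pagePrefixes.some((prefix) => currentPath.startsWith(prefix));
  if (!needed) return null; // other pages never download the script
  const script = doc.createElement('script');
  script.src = src;
  script.async = true; // download without blocking HTML parsing
  doc.head.appendChild(script);
  return script;
}
```

In the browser it would be called as `loadScriptOnPages(document, 'https://widgets.example.com/reviews.js', window.location.pathname, ['/product/'])`. In a Next.js app, "next/script" with the "lazyOnload" or "afterInteractive" strategy achieves a similar effect.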

Instead of Terser, we use the SWC-based minifier (the "swcMinify" option) to compress JS, which saved about 200 KB on a large project.

```javascript
// next.config.js
module.exports = {
  swcMinify: true,
};
```

We compile JS only for modern browsers. The default browser list in Next.js can be overridden with a browserslist configuration, for example in package.json:

```json
"browserslist": [
  "chrome 61",
  "edge 16",
  "firefox 60",
  "opera 48",
  "safari 11"
]
```

Then turn off legacy browser support in next.config.js:

```javascript
// next.config.js
module.exports = {
  experimental: {
    legacyBrowsers: false,
  },
};
```

To reduce Total Blocking Time (TBT), third-party scripts (e.g. Google Analytics) should be connected using "next/script" with an appropriate loading strategy, most often "afterInteractive". Here is an example of connecting Google Analytics using "next/script".
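Here is a minimal sketch, assuming a hypothetical helper `gaInitScript` and a placeholder measurement ID. The helper builds the standard gtag.js bootstrap code, which "next/script" would then render inline.

```javascript
// Hypothetical helper that builds the standard gtag.js bootstrap code
// for a given measurement ID, to be rendered by an inline next/script.
function gaInitScript(measurementId) {
  const id = JSON.stringify(measurementId); // safely quoted for inline JS
  return [
    'window.dataLayer = window.dataLayer || [];',
    'function gtag(){dataLayer.push(arguments);}',
    "gtag('js', new Date());",
    `gtag('config', ${id});`,
  ].join('\n');
}

console.log(gaInitScript('G-XXXXXXX'));
```

In a page or in `_app`, this string would be the child of `<Script id="ga-init" strategy="afterInteractive">`. That inline script sits next to `<Script src="https://www.googletagmanager.com/gtag/js?id=G-XXXXXXX" strategy="afterInteractive" />`, which loads the library itself. Inline scripts need an `id` so that Next.js can track them.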

Some components on the site stay hidden until the user interacts with them, typically in modal windows and sidebars. For these components, we use dynamic imports to reduce the amount of JavaScript needed for the initial page load. The code for a hidden component is loaded only when the user actually needs it.
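The mechanism can be sketched in a few lines. The loader runs only on first use, and the resulting promise is cached so later calls reuse the same module. The `lazy` helper and the `CartSidebar` name are hypothetical. In the app, the loader would be a dynamic `import()`.

```javascript
// Generic sketch of deferred loading, the idea behind dynamic imports:
// the loader runs on first use only, and its promise is cached.
function lazy(loader) {
  let pending = null;
  return function load() {
    if (pending === null) pending = loader(); // first call starts the download
    return pending; // later calls reuse the same promise
  };
}

// Stand-in loader so the sketch runs anywhere; in the app it would be
// something like () => import('../components/CartSidebar') (hypothetical path).
let calls = 0;
const loadCart = lazy(() => {
  calls += 1;
  return Promise.resolve({ name: 'CartSidebar' });
});

// Nothing is loaded until the user opens the cart, e.g. in a click handler:
// cartButton.addEventListener('click', () => loadCart().then(render));
```

For React components, "next/dynamic" provides this behaviour, e.g. `const CartSidebar = dynamic(() => import('../components/CartSidebar'))`, where the path is again illustrative.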

You can disable ESLint checks during the build in next.config.js. If linting already runs at the commit stage, there is no need to run it again during the build. This doesn't affect the user experience, but it speeds up the build.

```javascript
// next.config.js
module.exports = {
  eslint: {
    // Warning: This allows production builds to successfully complete even if
    // your project has ESLint errors.
    ignoreDuringBuilds: true,
  },
};
```

You can also pass the "--no-lint" option to "next build" in package.json:

```json
"scripts": {
  "dev": "ts-node server.ts",
  "build": "next build --no-lint",
  "build-analyze": "rimraf .next && cross-env ANALYZE=true next build",
  "start": "cross-env ts-node server.ts",
  "lint": "tsc && eslint **/*.{js,jsx,ts,tsx} --fix"
}
```

If a project is developed continuously with frequent releases, consider monitoring performance after each release. That way you can fix a regression as soon as a new feature introduces it, which is easier than waiting for a critical drop in performance and then optimising the whole system.
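A post-release check can start very simply. The sketch below compares each metric with the previous release and flags the metrics that got worse by more than a tolerance. The metric names, values and 10% tolerance are assumptions. All metrics here are "lower is better". The numbers would come from whatever tool runs the tests, such as WebPageTest.

```javascript
// Sketch of a post-release check: flag metrics that grew by more than
// `tolerance` (as a fraction) compared with the previous release.
// Assumes every metric is "lower is better" (times in ms, layout shift score).
function findRegressions(previous, current, tolerance = 0.1) {
  return Object.keys(previous).filter(
    (metric) => metric in current && current[metric] > previous[metric] * (1 + tolerance)
  );
}

// Illustrative numbers, not real measurements.
const previousRelease = { lcpMs: 2400, tbtMs: 300, cls: 0.05 };
const currentRelease = { lcpMs: 2500, tbtMs: 450, cls: 0.05 };
console.log(findRegressions(previousRelease, currentRelease)); // [ 'tbtMs' ]
```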

To stay proactive, we recommend following the front-end performance checklist by Vitaly Friedman. The checklist is updated every year, so its advice and approaches stay relevant and applicable to real projects.

We thank our lead front-end developer, Sergei Pestov, for helping prepare this article!

It's easy to start working with us: just fill in the brief or give us a call.